If All Else Fails, Try Brute Force…

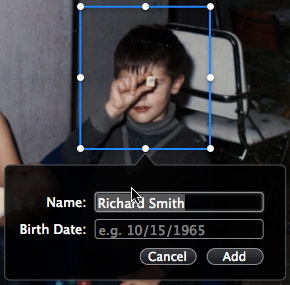

March 26th, 2009

A while ago, I posted on how happy I was with the new People Picker for MemoryMiner 2.0: I spent a lot of time refining it, since this is something that is at the very core of using the application. About a month ago, I took the pan & zoom engine that I had rewritten using Core Animation and married it to the annotation view. This is great because you get immediate feedback as to the best place for creating a selection marker.

Things were great… Until.

Turns out mixing layer-hosting views (i.e. the pan & zoom) with layer-backed views (i.e. the People Picker view) can lead to some nasty side effects if the layer-backed view happens to contain any NSScrollViews. That is of course the case with the People Picker, since it contains a table view with potentially a lot of items. To show what I mean, have a look at the screen movie below, and notice how the table items fade in and out as the scrolling takes place:

Horrid, no?

By comparison, here’s another screen movie showing the original behavior, before I was mixing layer-hosting and layer-backed views:

The fundamental problem is, you have no control over the animation that is automatically created to fade in the sections of the scroll view. Bummer.
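For reference, the difference between the two kinds of view comes down to who creates and owns the layer. Here is a minimal sketch in Swift (the application itself predates Swift, and the view names and frame sizes are hypothetical, chosen only for illustration):

```swift
import AppKit
import QuartzCore

// Layer-hosting view: the app creates the layer and manages the layer tree.
// Assign the layer BEFORE setting wantsLayer.
let panZoomView = NSView(frame: NSRect(x: 0, y: 0, width: 640, height: 480))
panZoomView.layer = CALayer()
panZoomView.wantsLayer = true

// Layer-backed view: AppKit creates and manages the layer (and any
// implicit animations on it) on the view's behalf.
let pickerView = NSView(frame: NSRect(x: 0, y: 0, width: 240, height: 320))
pickerView.wantsLayer = true
```

In the layer-backed case the layer belongs to AppKit, which is why the fade animation on the scroll view's contents is out of the app's hands.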

Over the course of a few weeks, I spent more time than I care to think about asking the smartest people I know how to get around this problem. I came up empty. Finally, a request to Apple DTS returned the suggestion of re-writing the table view using CALayers: thanks, but no thanks!

In the end, I punted and let the Window Server deal with the problem. That’s right: I created a transparent window to host the view with the scrolling NSTableView, and added it as a child window of my application window. It took some fiddling to get the positioning right, and to deal with a number of other issues related to mouse tracking, but it all works now.
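The general shape of the child-window approach can be sketched roughly as follows. This is not the actual MemoryMiner code: it is written in Swift for illustration, and the helper name `attachOverlayWindow` and its parameters are assumptions.

```swift
import AppKit

/// Hypothetical helper: hosts `pickerView` in a transparent, borderless
/// child window placed over `frameInParent`, a rect given in the parent
/// window's coordinate system.
func attachOverlayWindow(hosting pickerView: NSView,
                         to parent: NSWindow,
                         frameInParent: NSRect) -> NSWindow {
    // Windows are positioned in screen coordinates, so convert the rect first.
    let screenFrame = parent.convertToScreen(frameInParent)

    let overlay = NSWindow(contentRect: screenFrame,
                           styleMask: .borderless,
                           backing: .buffered,
                           defer: false)
    overlay.isReleasedWhenClosed = false
    overlay.isOpaque = false          // let the parent show through
    overlay.backgroundColor = .clear
    overlay.hasShadow = false
    overlay.contentView = pickerView

    // A child window moves along with its parent and stays ordered above it.
    parent.addChildWindow(overlay, ordered: .above)
    return overlay
}
```

A child window follows its parent when the parent moves, but not when it resizes, so the overlay's frame has to be recomputed in that case. A borderless window also refuses key status by default; a subclass overriding `canBecomeKey` is one way to let the table view receive keyboard input.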

A real pain in the ass, but dare I say worth it. Sometimes a little brute force does the trick.